For the final project, I decided to implement both Neural Style Transfer and the Lightfield Camera.

Part A: Neural Style Transfer

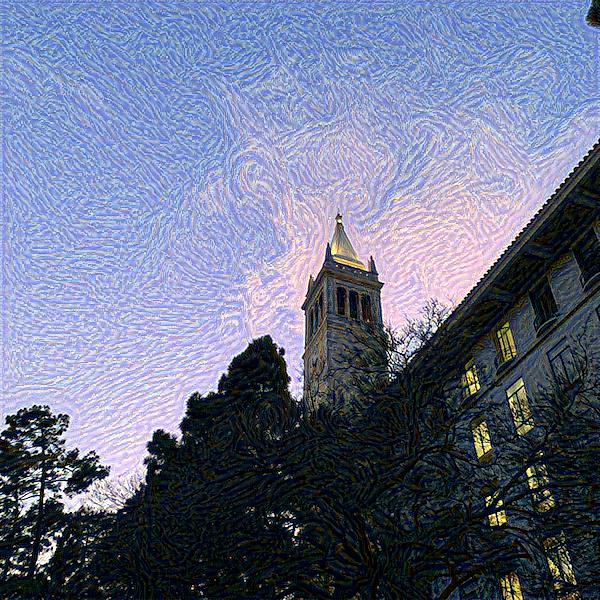

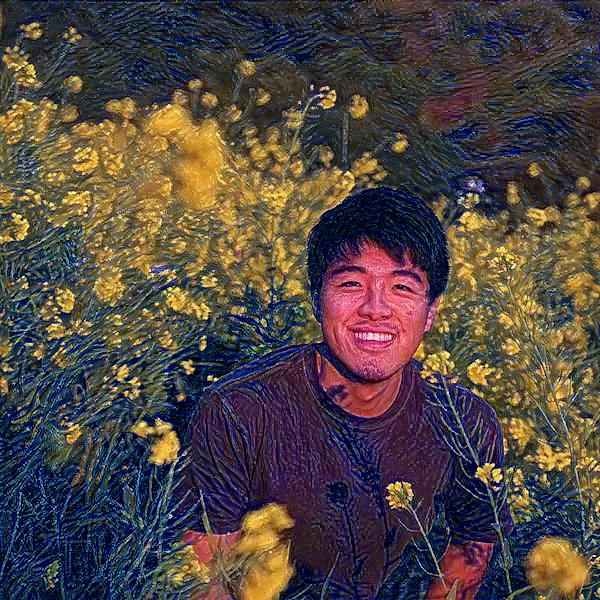

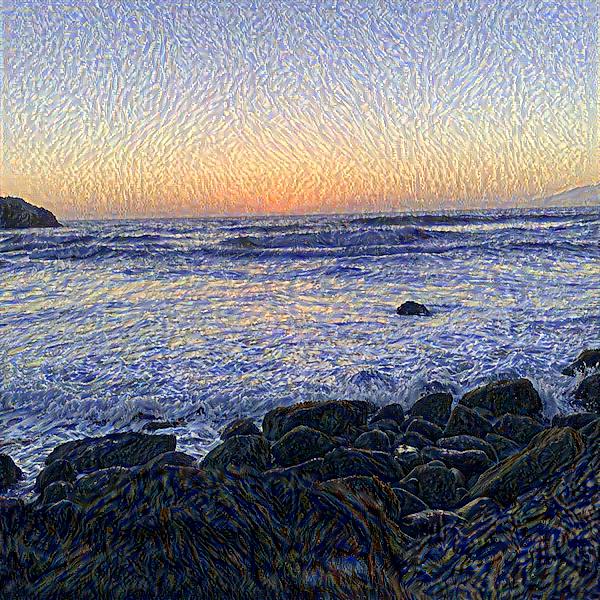

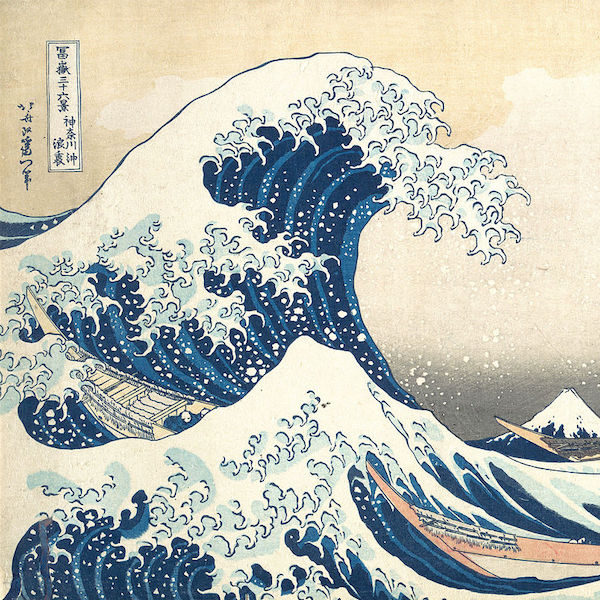

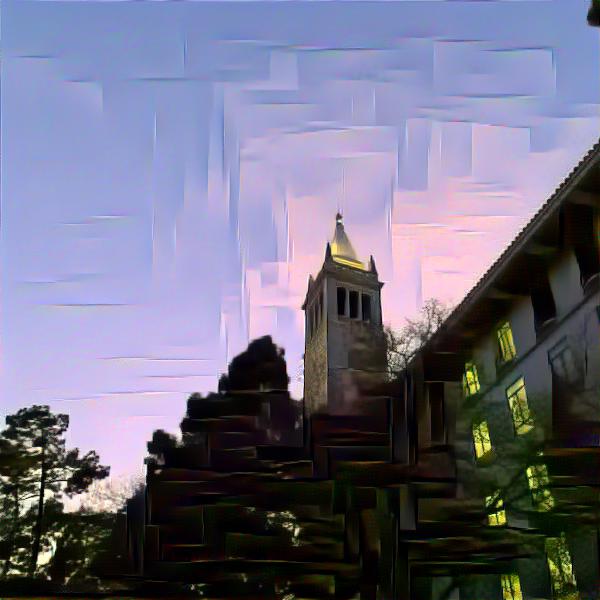

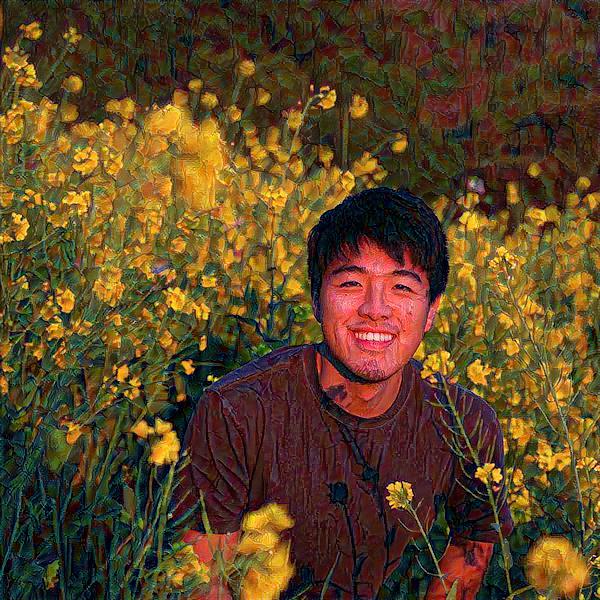

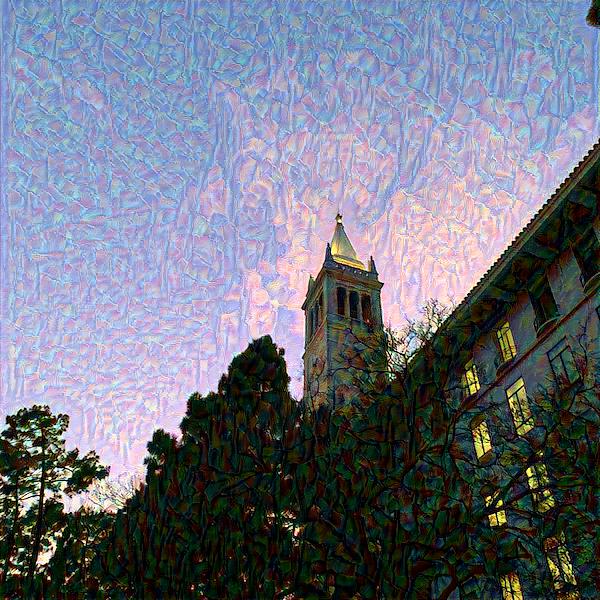

The neural style transfer project has easily been one of my favorite projects this semester. For this project, we had to reimplement the paper by Leon A. Gatys, Alex S. Ecker and Matthias Bethge. The essence of the algorithm is to minimize a two-component loss, made up of a content loss and a style loss, by running backpropagation with respect to the pixels of the output image rather than the network weights. The features used in both losses come from specific, predetermined layers of the pretrained VGG-19 CNN. The layers essential for the style loss are conv1_1, conv2_1, conv3_1, conv4_1 and conv5_1. At each of these layers, we compute the MSE loss between the Gram matrix of the style image's features and the Gram matrix of the current image's features. For the content loss, we take the MSE loss between the feature maps of the content image and those of the current image. After tuning the hyperparameters, this yields the amazing results shown above. One clear limitation is that for pictures with little to no texture, there is little to no observable difference.
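As a rough illustration of the two loss terms, here is a minimal NumPy sketch. It assumes the VGG-19 activations have already been extracted as arrays of shape (C, H, W), normalizes the Gram matrix by C·H·W (one common choice, not necessarily the exact constant from the paper), weights all style layers equally, and uses hypothetical default weights `alpha` and `beta`. In the actual project these quantities would be computed inside a deep learning framework so that their gradient with respect to the image pixels can drive the optimization; this sketch only evaluates the loss values.

```python
from typing import Sequence

import numpy as np


def gram_matrix(features: np.ndarray) -> np.ndarray:
    """Channel-by-channel correlations of a (C, H, W) feature map.

    Normalizing by C * H * W is an assumption; implementations differ here.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)  # one row per channel, one column per position
    return flat @ flat.T / (c * h * w)


def mse(a: np.ndarray, b: np.ndarray) -> float:
    return float(np.mean((a - b) ** 2))


def style_loss(current: Sequence[np.ndarray], style: Sequence[np.ndarray]) -> float:
    """Mean Gram-matrix MSE over the style layers (e.g. conv1_1 ... conv5_1), equally weighted."""
    per_layer = [mse(gram_matrix(x), gram_matrix(s)) for x, s in zip(current, style)]
    return sum(per_layer) / len(per_layer)


def content_loss(current: np.ndarray, content: np.ndarray) -> float:
    """MSE between the feature maps of the current image and of the content image."""
    return mse(current, content)


def total_loss(cur_content, tgt_content, cur_style, tgt_style,
               alpha: float = 1.0, beta: float = 1e3) -> float:
    """Weighted sum of both terms; alpha and beta are hypothetical defaults to be tuned."""
    return alpha * content_loss(cur_content, tgt_content) + beta * style_loss(cur_style, tgt_style)
```

Because the Gram matrix sums over all spatial positions, shuffling the positions of a feature map leaves it unchanged. The style term therefore compares texture statistics rather than layout, which may also help explain the limitation above: a picture with little texture gives the style term little to work with.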

Van Gogh

The Great Wave off Kanagawa

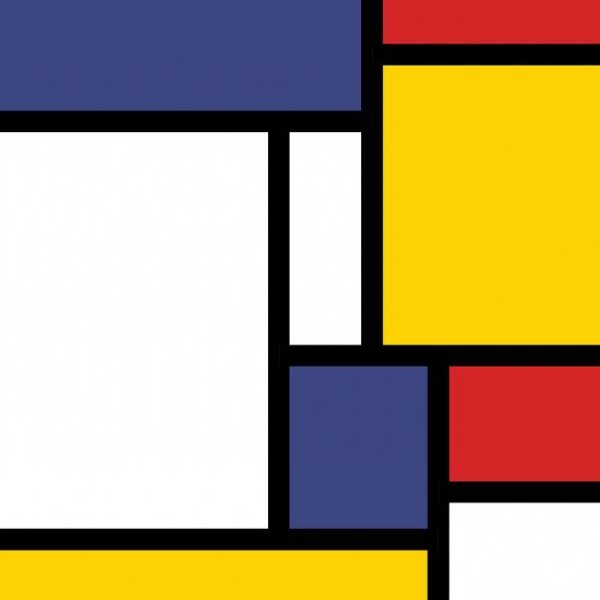

Piet Mondriaan

Leonid Afremov

Puzou

Part B: Lightfield Camera

For the lightfield camera, we were given a 17 x 17 grid of pictures. Because each camera position in the grid captures a slightly different set of rays, we can adjust the focus and the aperture after the photos have been taken. The focus can be adjusted by shifting each photo in proportion to its offset from the center camera, with a chosen stride, and then averaging the shifted photos. The aperture can be adjusted by choosing how many photos around the center camera go into that average. The results can be seen below:

Lessons Learned

I think the main thing I learned from this project is how easy it actually is to change the aperture and focus when you have a large grid of photos: it comes down to simple sampling and translations. Nonetheless, the downside also became clear, namely that you have to store a huge number of photos, which was apparent in the large file size I had to submit for this project.
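The "sampling and translations" can be made concrete with a minimal NumPy sketch of shift-and-average refocusing with an adjustable aperture. It assumes the grid of views is stored as one grayscale array of shape (U, V, H, W); the function name `refocus`, the parameters `alpha` (pixel shift per grid step) and `radius` (aperture, in grid steps), and the sign convention of the shift are hypothetical choices, and integer `np.roll` shifts stand in for the sub-pixel interpolation a real implementation would likely use.

```python
from typing import Optional

import numpy as np


def refocus(views: np.ndarray, alpha: float, radius: Optional[float] = None) -> np.ndarray:
    """Shift-and-average refocusing over a U x V grid of grayscale views.

    views:  array of shape (U, V, H, W); views[u, v] is the photo from grid position (u, v).
    alpha:  pixel shift applied per grid step away from the center camera; changing it
            moves the plane of focus (the sign convention here is an assumption).
    radius: if given, only views within this distance (in grid steps) of the center are
            averaged, which simulates a smaller aperture.
    """
    n_u, n_v = views.shape[:2]
    cu, cv = (n_u - 1) / 2, (n_v - 1) / 2  # center camera, e.g. (8, 8) for a 17 x 17 grid
    acc = np.zeros(views.shape[2:], dtype=np.float64)
    count = 0
    for u in range(n_u):
        for v in range(n_v):
            du, dv = u - cu, v - cv
            if radius is not None and np.hypot(du, dv) > radius:
                continue  # camera lies outside the simulated aperture
            # Integer shifts via np.roll; sub-pixel interpolation would be smoother.
            shift = (round(alpha * du), round(alpha * dv))
            acc += np.roll(views[u, v], shift, axis=(0, 1))
            count += 1
    return acc / count
```

Sweeping `alpha` moves the plane of focus through the scene, and shrinking `radius` averages fewer views, so objects away from that plane blur less, as with a smaller physical aperture.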

Bells and Whistles: Hammer and why manual data collection fails

For the bells and whistles, I chose to collect my own data. For that purpose, I captured 25 pictures of my hammer on my dinner table in a 5 x 5 grid. Upon inspection, we see that the results turn out extremely blurry. This is most likely because capturing the photos by hand failed to reproduce a proper grid: the spacings are too inconsistent, and too large compared with those of a real lightfield camera. As a consequence, when we take the average, the hammer disappears, which is indeed what the GIFs above show.
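The effect of inconsistent spacing can be illustrated with a small synthetic experiment (not the actual hammer data): a single bright point is placed in every view of a 5 x 5 grid at a position set by the camera's true offset, and each view is then shifted back using the offset we assume the camera had. The function name `peak_after_refocus`, the `disparity` value, the jitter size and the 64 x 64 image size are all hypothetical. When true and assumed offsets agree, all views stack on one pixel and the peak of the average is 1.0; every misplaced camera moves its copy of the point elsewhere, lowering the peak and smearing the point out.

```python
import numpy as np


def peak_after_refocus(true_offsets, assumed_offsets, disparity, size=64):
    """Simulate shift-and-average refocusing of a single bright point.

    true_offsets:    (u, v) grid offsets where each photo was actually taken.
    assumed_offsets: (u, v) offsets we believe were used (a perfect grid).
    disparity:       pixels the point moves per unit of camera offset.
    Returns the peak of the averaged image: 1.0 means perfect alignment.
    """
    base = np.zeros((size, size))
    base[size // 2, size // 2] = 1.0
    acc = np.zeros_like(base)
    for (tu, tv), (au, av) in zip(true_offsets, assumed_offsets):
        # Where the point really lands, given the true camera position.
        view = np.roll(base, (round(-disparity * tu), round(-disparity * tv)), axis=(0, 1))
        # The correction we apply, based on the assumed grid position.
        acc += np.roll(view, (round(disparity * au), round(disparity * av)), axis=(0, 1))
    return acc.max() / len(true_offsets)


# A perfect 5 x 5 grid with offsets -2..2 along each axis.
grid = [(u - 2.0, v - 2.0) for u in range(5) for v in range(5)]

# Hand-held capture: every camera position is off by a random amount (hypothetical jitter).
rng = np.random.default_rng(0)
jittered = [(u + rng.normal(0, 0.5), v + rng.normal(0, 0.5)) for u, v in grid]

print(peak_after_refocus(grid, grid, disparity=4))      # all 25 views align
print(peak_after_refocus(jittered, grid, disparity=4))  # point smeared over many pixels
```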